Building a Twitch Text-To-Speech app

I've been dabbling in Twitch streaming as a side hobby, and one thing that surprised me is the number of paid services for running text-to-speech (TTS) on your stream's chat.

All I'm really after is a simple text-to-speech system: one that assigns a voice to each person in my stream chat (thankfully I'm not streaming to tens of thousands of people at a time) and reads out their messages, saving me from having to alt+tab out of whatever game I'm playing.

Most products on the market charge a monthly subscription, which isn't economical for a smaller stream.

Speech synthesis

In a nutshell, a text-to-speech app is relatively simple to put together. The browser's SpeechSynthesis API (part of the Web Speech API) lets you do text-to-speech using the voices available on the device, and it is supported by all major browsers.

If you're looking for a zero-cost approach, this is the way to go.
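For comparison, here is a minimal browser-only sketch of that zero-cost route, assuming we want each chatter to keep the same device voice. The string hash used to map a username to an index into `speechSynthesis.getVoices()` is an illustrative choice, not something the API provides, and the function names are hypothetical.

```javascript
// Map a username to a stable index in [0, voiceCount) with a simple
// 31-multiplier string hash, so a chatter keeps the same voice between messages.
function voiceIndexFor(username, voiceCount) {
  let hash = 0;
  for (const ch of username.toLowerCase()) {
    // ">>> 0" keeps the running hash an unsigned 32-bit integer
    hash = (hash * 31 + ch.codePointAt(0)) >>> 0;
  }
  return hash % voiceCount;
}

// Browser-only: read a chat message aloud with the built-in Web Speech API.
function speakWithDeviceVoice(username, message) {
  const synth = window.speechSynthesis;
  const voices = synth.getVoices();
  const utterance = new SpeechSynthesisUtterance(message);
  if (voices.length > 0) {
    utterance.voice = voices[voiceIndexFor(username, voices.length)];
  }
  // speak() adds the utterance to the queue behind any already playing
  synth.speak(utterance);
}
```

In Chromium-based browsers `getVoices()` can return an empty list until the `voiceschanged` event has fired, so a real app would wait for that event before speaking.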

Unfortunately, modern text-to-speech services (especially the paid ones) offer far better-sounding voices than the ones built into the device. And since a stream can (theoretically) have an unlimited number of chatters, we want a solution with a large range of voices.

Amazon Polly is AWS's speech synthesis service. It offers a wide range of voices, with both standard and premium (neural) options.

Ideally, we can use this in our TTS app to give donors to the stream a higher-quality voice.
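One way the donor perk could work, sketched under assumptions: each voice returned by Polly's DescribeVoices lists its `SupportedEngines`, so we can request the `neural` engine for donors when the voice supports it and fall back to `standard` otherwise. How a chatter qualifies as a donor, and the `donatorVoiceEnabled` flag (mirroring a DonatorVoice tickbox), are hypothetical inputs left to the caller.

```javascript
// Choose which Polly engine to request for a chatter.
// `voice` is an entry from a DescribeVoices response (it lists SupportedEngines).
// `isDonor` and `donatorVoiceEnabled` are hypothetical inputs decided elsewhere.
function pickEngine(voice, isDonor, donatorVoiceEnabled) {
  const engines = voice.SupportedEngines || [];
  if (donatorVoiceEnabled && isDonor && engines.includes("neural")) {
    return "neural";
  }
  if (engines.includes("standard")) return "standard";
  // Some voices are neural-only, so fall back to whatever the voice supports.
  return engines[0];
}
```

Because neural-only voices fall through to `neural` even for non-donors, keeping everyone else on standard pricing would also mean assigning non-donors only voices that support the standard engine.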

Polly's pricing is very cost-effective: it follows Amazon's pay-as-you-go model, so costs stay low until we scale up.
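Because Polly bills per character synthesised, a back-of-the-envelope estimate is simple arithmetic. This sketch takes the price per million characters as an input rather than hard-coding one, since rates differ by engine and region and change over time; the function name and the chat-volume figures below are made up for illustration.

```javascript
// Rough monthly Polly cost: characters synthesised / 1,000,000 * price per million.
// pricePerMillionChars is an input: look up the current rate for your engine and region.
function estimateMonthlyCost({ messagesPerStream, avgMessageLength, streamsPerMonth, pricePerMillionChars }) {
  const chars = messagesPerStream * avgMessageLength * streamsPerMonth;
  return (chars / 1_000_000) * pricePerMillionChars;
}
```

For example, 500 messages of about 40 characters across 10 streams is 200,000 characters a month, which at a hypothetical rate of $10 per million characters comes to $2.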

Twitch Chat

The tmi.js library handles the Twitch chat side of things. It provides a simple way to monitor a stream's chat, as shown in the library's own example:

```javascript
const tmi = require('tmi.js');

const client = new tmi.Client({
  options: { debug: true },
  identity: {
    username: 'bot_name',
    password: 'oauth:my_bot_token'
  },
  channels: [ 'my_channel' ]
});

client.connect().catch(console.error);

client.on('message', (channel, tags, message, self) => {
  // Ignore messages sent by the bot itself
  if (self) return;

  if (message.toLowerCase() === '!hello') {
    // Reply by mentioning the chatter, e.g. "@alca, heya!"
    client.say(channel, `@${tags.username}, heya!`);
  }
});
```

Building a UI

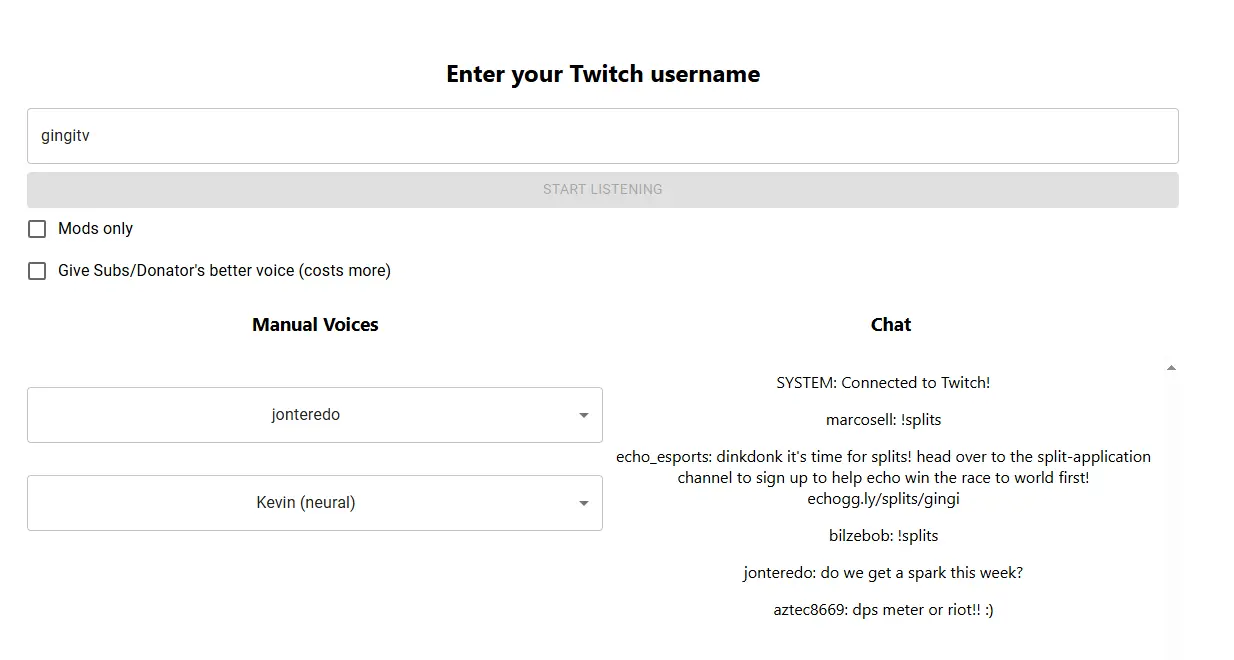

Ideally, we want to be able to do a few things:

- Connect to the Twitch channel provided by the user

- Display each message

And we want to give the user the abilities to:

- See which voice is assigned to a given chatter

- Change which voice is assigned to a given chatter

- Restrict TTS to moderators and VIPs only (useful in a large stream)
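The moderator/VIP restriction can be sketched as a filter over the `tags` object tmi.js passes to its message handler. This assumes the app keeps a `modsOnly` flag (name assumed, mirroring a ModsOnly tickbox) and treats the broadcaster as always allowed; the badge keys are Twitch's own badge set IDs.

```javascript
// Decide whether a chat message should be read aloud.
// `tags` is the userstate object tmi.js passes to its "message" handler;
// `config.modsOnly` mirrors the ModsOnly tickbox (field name assumed).
function shouldSpeak(tags, config) {
  if (!config.modsOnly) return true;

  // badges may be null when the chatter has none
  const badges = tags.badges || {};
  return Boolean(tags.mod || badges.broadcaster || badges.moderator || badges.vip);
}
```

In the message handler this becomes a guard such as `if (!shouldSpeak(tags, config)) return;` before any speech is requested, so filtered messages never cost a Polly call.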

A quick create-react-app later (today I'd recommend something like Next.js instead, but I started building this six months ago), we have something to work with.

State

There are a few things we track in state.

The Twitch chat itself, which is a simple array of TwitchMessage objects.

The TTS app's configuration, meaning which tickboxes are checked (ModsOnly, DonatorVoice).
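A sketch of how the chat log and the tickbox config might live together in React state via `useReducer`. The action names, field names, and the 100-message cap are assumptions for illustration, not taken from the app.

```javascript
// Hypothetical cap so a busy chat doesn't grow the log forever.
const MAX_MESSAGES = 100;

const initialState = {
  messages: [], // TwitchMessage-like objects, newest last
  config: { modsOnly: false, donatorVoice: false },
};

// Pure reducer in the shape React's useReducer expects: (state, action) => newState.
function chatReducer(state, action) {
  switch (action.type) {
    case "message": {
      // Append the new message and keep only the most recent MAX_MESSAGES.
      const messages = [...state.messages, action.message].slice(-MAX_MESSAGES);
      return { ...state, messages };
    }
    case "toggle":
      // Flip one config flag, e.g. { type: "toggle", key: "modsOnly" }.
      return { ...state, config: { ...state.config, [action.key]: !state.config[action.key] } };
    default:
      return state;
  }
}
```

In a component this would be wired up as `const [state, dispatch] = useReducer(chatReducer, initialState);`, with the tmi.js message handler dispatching `{ type: "message", message }`.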

The list of available Polly voices, since we have to send a DescribeVoicesCommand on page load to fetch them.
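Once DescribeVoicesCommand returns, its `Voices` array can be split into pools. A minimal sketch, assuming we want separate standard and neural pools and a stable order (sorted by `Id`) so that any index-based assignment picks the same voice on every page load; the function name is hypothetical.

```javascript
// Split the Voices array from a DescribeVoices response into engine pools.
// Sorting a copy by Id gives a stable order without mutating the input.
function buildVoicePools(voices) {
  const byId = [...voices].sort((a, b) => a.Id.localeCompare(b.Id));
  const supports = (engine) => (v) => (v.SupportedEngines || []).includes(engine);
  return {
    standard: byId.filter(supports("standard")),
    neural: byId.filter(supports("neural")),
  };
}
```

A voice that supports both engines appears in both pools, which is what a donor upgrade needs: the same voice can be requested with either engine.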

Lastly, a key-value map called twitchVoices pairs each Twitch username with a Polly voice. This lets the user see, and change, which voice is assigned to each chatter.
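A minimal sketch of helpers for twitchVoices, assuming the map stores Polly voice Ids and that new chatters are assigned voices round-robin (an illustrative strategy; the app may pick differently). Both helpers return a new object instead of mutating, which is what React state updates expect.

```javascript
// Give `username` a voice if they don't have one yet (round-robin over voiceIds).
function assignVoiceIfMissing(twitchVoices, username, voiceIds) {
  // hasOwnProperty avoids false hits on inherited keys like "constructor"
  if (Object.prototype.hasOwnProperty.call(twitchVoices, username)) return twitchVoices;
  if (voiceIds.length === 0) return twitchVoices;
  const next = voiceIds[Object.keys(twitchVoices).length % voiceIds.length];
  return { ...twitchVoices, [username]: next };
}

// Used when the streamer manually picks a different voice for a chatter.
function reassignVoice(twitchVoices, username, voiceId) {
  return { ...twitchVoices, [username]: voiceId };
}
```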

Polly

The app uses the AWS SDK to send requests to Polly, which returns the synthesised speech as an audio stream. That stream is read into a byte array, wrapped in a Blob, and the Blob's object URL is handed to an Audio instance and played.

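```javascript
import { SynthesizeSpeechCommand } from "@aws-sdk/client-polly";

// Synthesise one chat message with the chatter's Polly voice and play it.
// `pollyClient` is a PollyClient created elsewhere in the app, and `pollyVoice`
// is an entry from the DescribeVoices response (function name is illustrative).
const speakMessage = (message, pollyVoice) => {
  pollyClient
    .send(
      new SynthesizeSpeechCommand({
        Engine: pollyVoice.SupportedEngines[0],
        OutputFormat: "mp3",
        Text: message,
        VoiceId: pollyVoice.Id,
      })
    )
    // Read the returned audio stream into a Uint8Array
    .then((output) => output.AudioStream?.transformToByteArray())
    .then((array) => {
      if (!array) return;

      const blob = new Blob([array], { type: "audio/mpeg" });
      const url = URL.createObjectURL(blob);
      const audio = new Audio(url);

      // play() resolves when playback *starts*, so free the object URL
      // once the clip has actually finished instead.
      audio.addEventListener("ended", () => URL.revokeObjectURL(url), { once: true });
      audio.play().catch(console.error);
    })
    .catch(console.error);
};
```

Originally, I had intended to create a single Audio instance and reuse it for every message. Unfortunately, Twitch chat moves fast enough that another message is quite likely to arrive before the current message's audio has finished playing.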

Creating a new Audio instance per message lets multiple messages play at the same time, keeping things as 'live' as possible.
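The one-Audio-per-message approach can be sketched as a small player that tracks which clips are still playing. The `createAudio` factory and `onClipEnded` callback are hypothetical parameters: in the browser they would be `(url) => new Audio(url)` and `(url) => URL.revokeObjectURL(url)`, and injecting them lets the bookkeeping run without a real audio device.

```javascript
// Play every clip on its own audio element so overlapping messages
// don't wait for each other.
function createConcurrentPlayer(createAudio, onClipEnded = () => {}) {
  const playing = new Set();

  return {
    play(url) {
      const audio = createAudio(url);
      playing.add(audio);
      audio.addEventListener(
        "ended",
        () => {
          playing.delete(audio);
          onClipEnded(url);
        },
        { once: true }
      );
      // If playback is refused (e.g. by an autoplay policy), stop tracking the clip.
      return audio.play().catch((err) => {
        playing.delete(audio);
        throw err;
      });
    },
    // Number of clips currently playing.
    get activeCount() {
      return playing.size;
    },
  };
}
```

Exposing `activeCount` also leaves room to cap overlapping clips later if a busy chat gets too noisy.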

The benefit of playing the audio directly in the browser is that someone streaming with a tool like OBS will simply have it come through their 'Speaker Audio', without needing to install any extensions or external apps.

Conclusion

What started as a simple TTS tool for my own streams is now available as a fully open-source project.

If you're interested, check out the code on GitHub.